IPBench

IPBench

🎉 [2025-04-23]: Our IPBench paper (IPBench: Benchmarking the Knowledge of Large Language Models in Intellectual Property) can be accessed in arXiv!

🔥 [2025-04-17]: We will release our paper and benchmark soon.

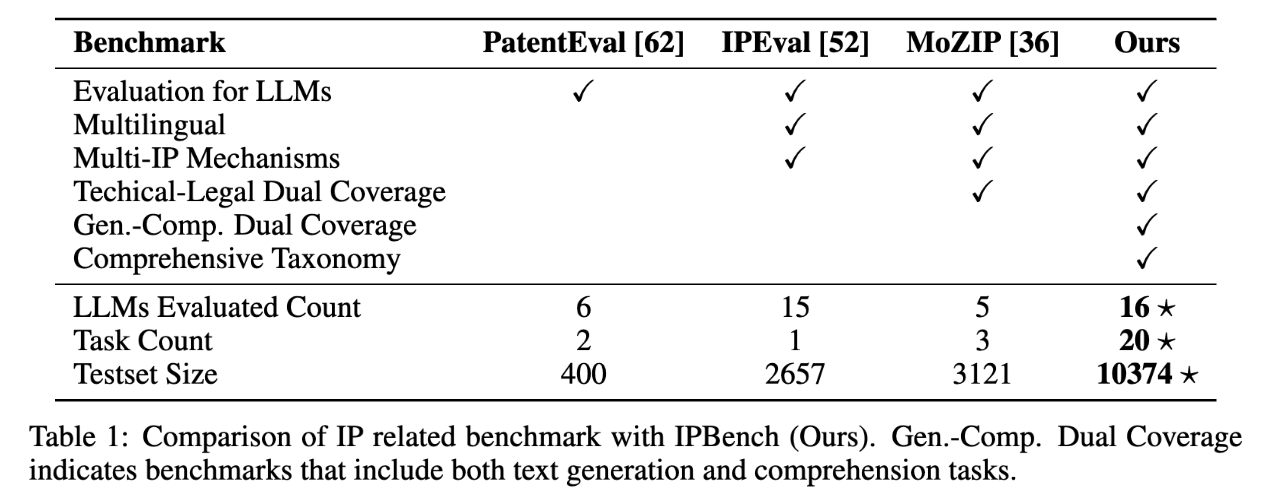

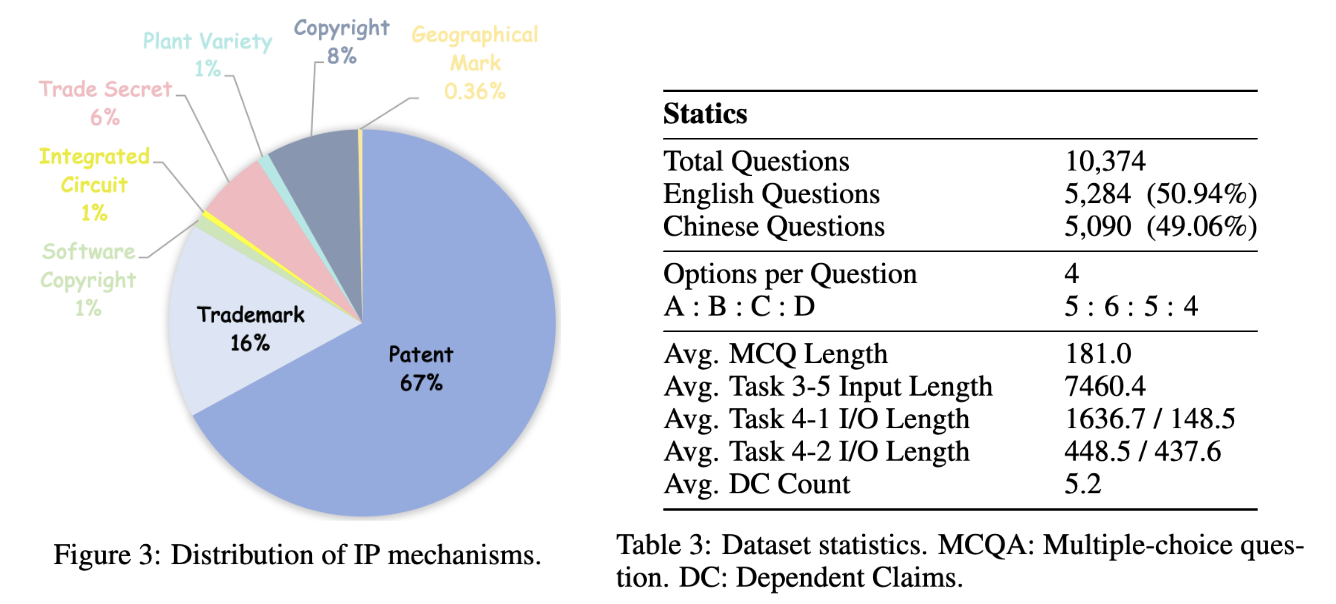

Intellectual property, especially patents, shares similarities with academic papers in that it encapsulates the essence of domain knowledge across various technical fields. However, it is also governed by the intellectual property legal frameworks of different countries and regions. As such, it carries technical, legal, and economic significance, and is closely connected to real-world intellectual property services. In particular, intellectual property data is a rich, multi-modal data type with immense potential for content mining and analysis. Focusing on the field of intellectual property, we propose a comprehensive four-level IP task taxonomy based on the DOK model. Building on this taxonomy, we developed IPBench, a large language model benchmark consisting of 10,374 data instances across 20 tasks and covering 8 types of IP mechanisms. Compared to existing related benchmarks, IPBench features the largest data scale and the most comprehensive task coverage, spanning technical and legal tasks as well as understanding, reasoning, classification, and generation tasks.

Intellectual Property Benchmark

Intellectual Property Benchmark

To bridge the gap between real-world demands and the application of LLMs in the IP field, we introduce the first comprehensive IP task taxonomy. Our taxonomy is based on Webb's Depth of Knowledge (DOK) Theory and is extended to include four hierarchical levels: Information Processing, Logical Reasoning, Discriminant Evaluation, and Creative Generation. It includes an evaluation of models' intrinsic knowledge of IP, along with a detailed analysis of IP text from both point-wise and pairwise perspectives, covering technical and legal aspects.

Building on this taxonomy, we develop IPBench, the first comprehensive Intellectual Property Benchmark for LLMs, consisting of 10,374 data points across 20 tasks aimed at evaluating the knowledge and capabilities of LLMs in real-world IP applications.

This holistic evaluation enables us to gain a hierarchical deep insight into LLMs, assessing their capabilities in in-domain memory, understanding, reasoning, discrimination, and creation across different IP mechanisms. Due to the legal nature of the IP field, there are regional differences between countries. Our IPBench is constrained within the legal frameworks of the United States and mainland China, making it a bilingual benchmark.

We provide a detailed comparison between IPBench and existing benchmarks. These features make our IPBench a most large-scale and task comprehensive benchmark for Large Language Models.

More statics please see our paper.

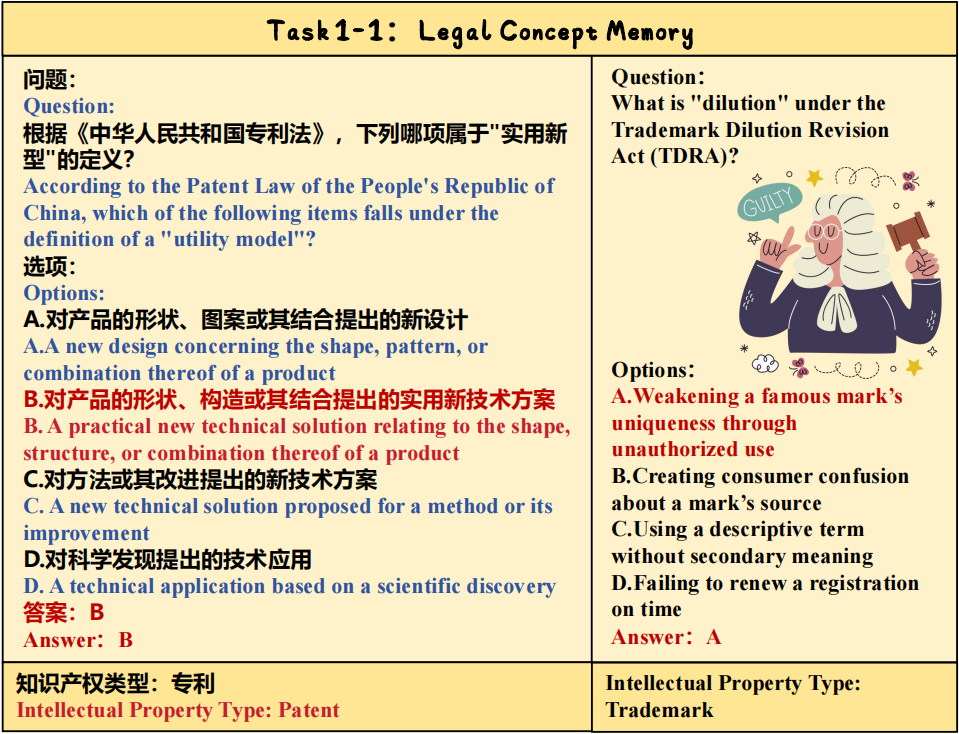

Task example of Task 1-1.

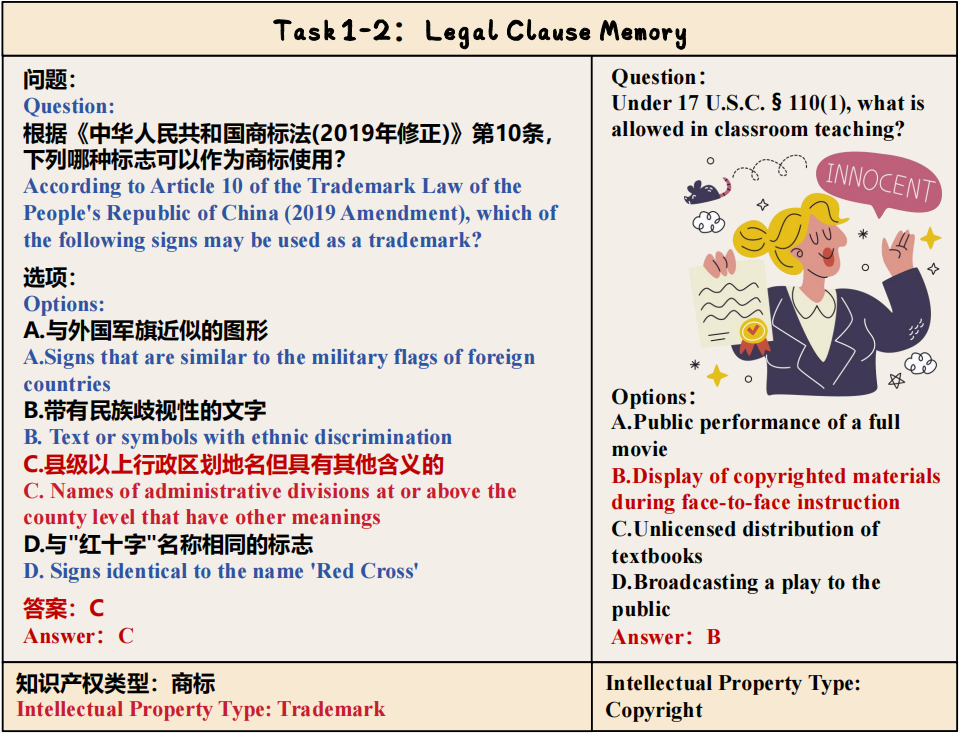

Task example of Task 1-2.

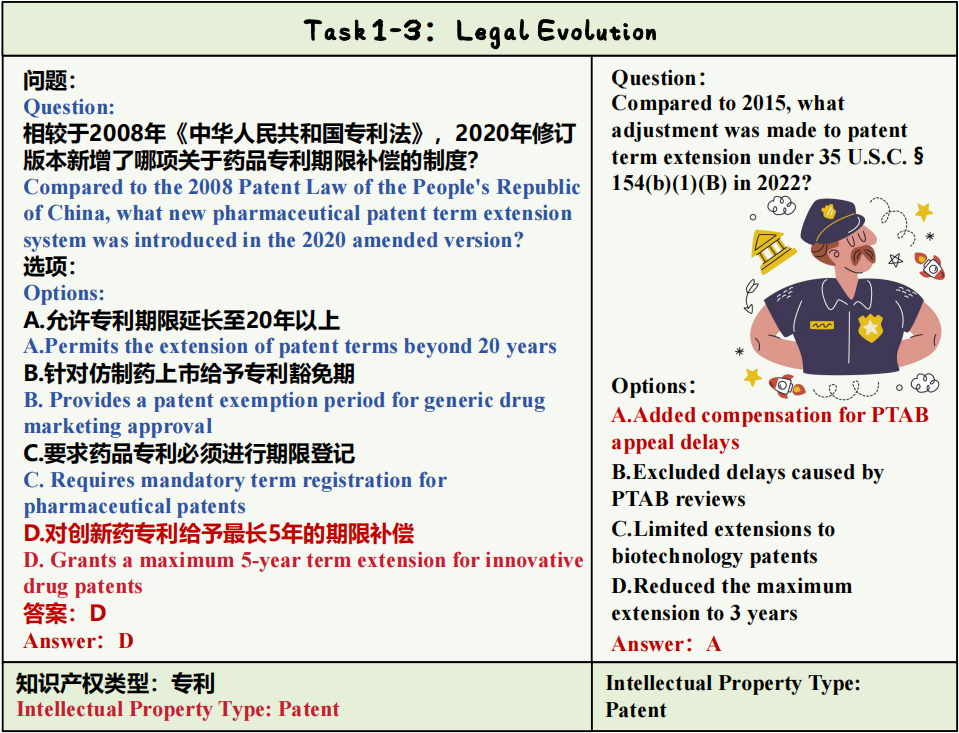

Task example of Task 1-3.

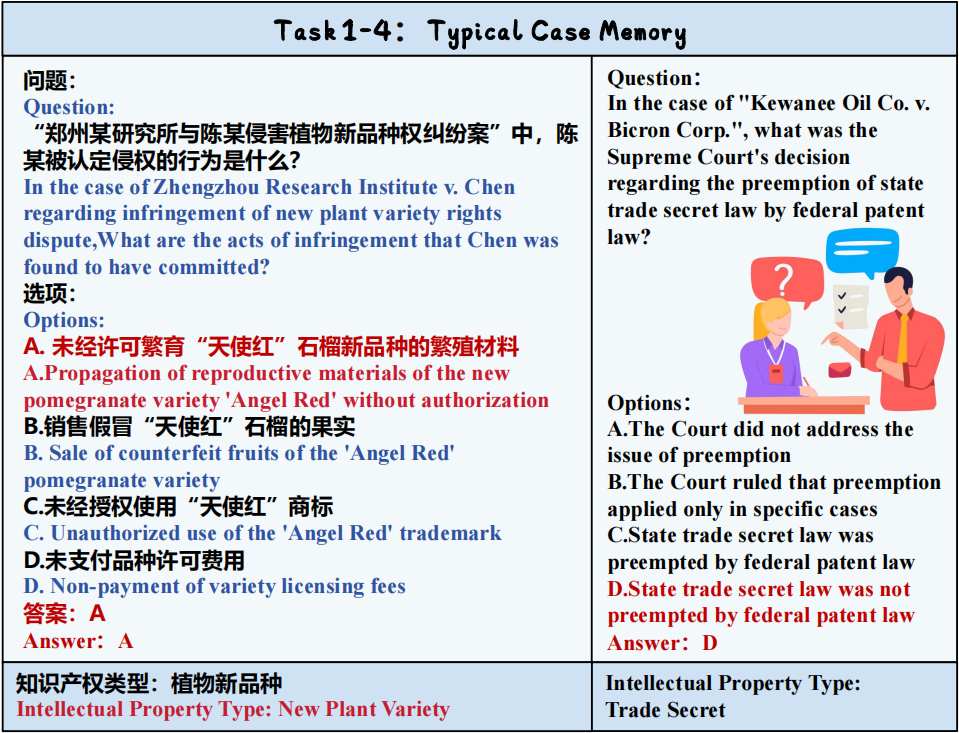

Task example of Task 1-4.

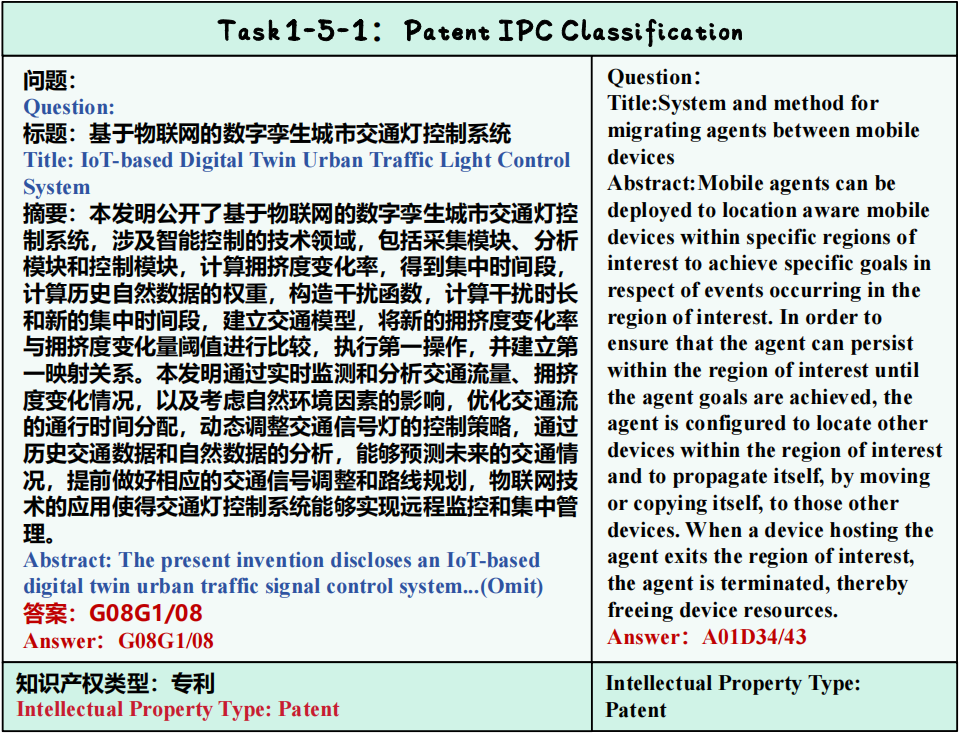

Task example of Task 1-5-1.

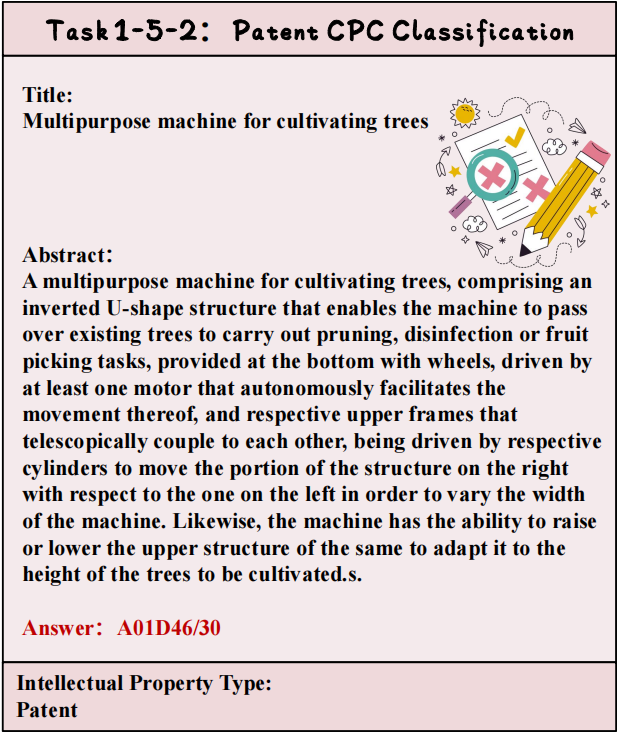

Task example of Task 1-5-2.

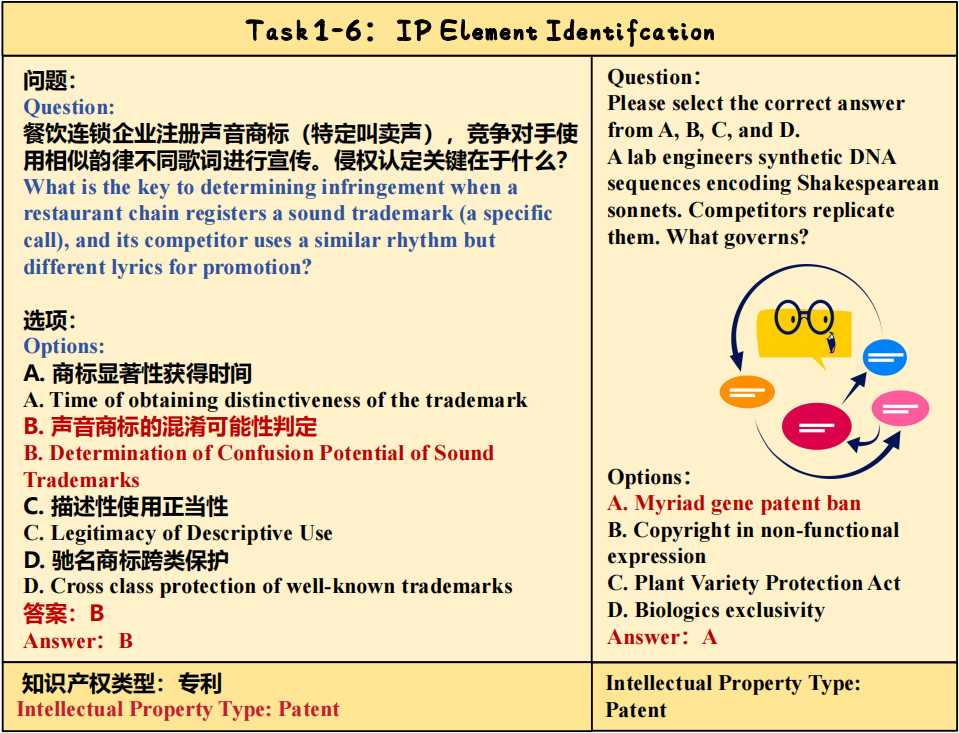

Task example of Task 1-6.

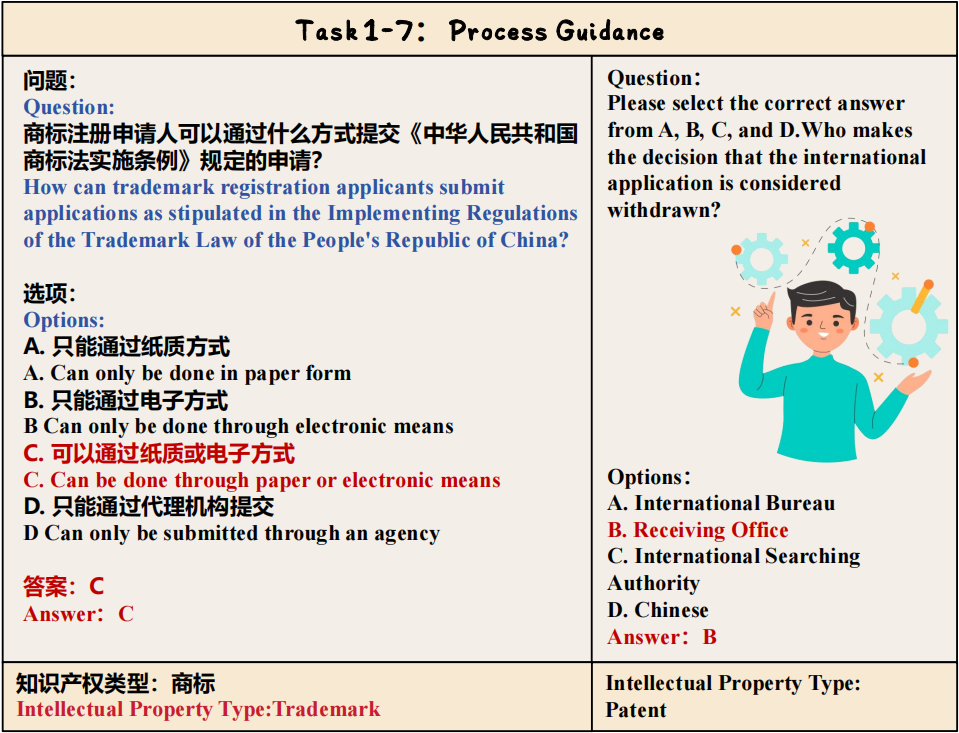

Task example of Task 1-7.

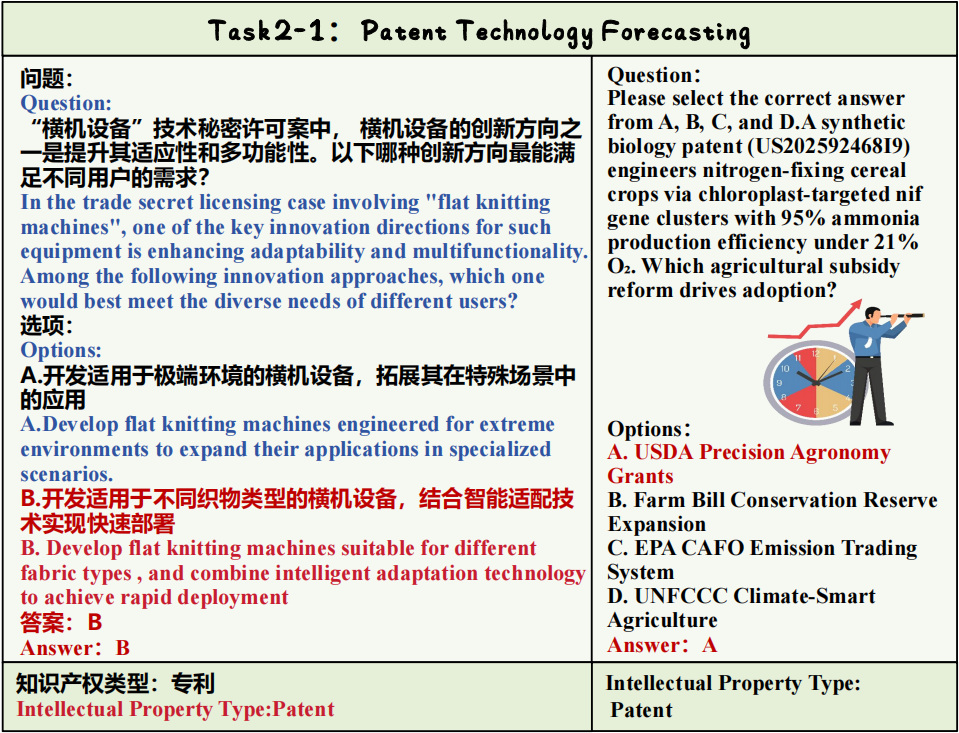

Task example of Task 2-1.

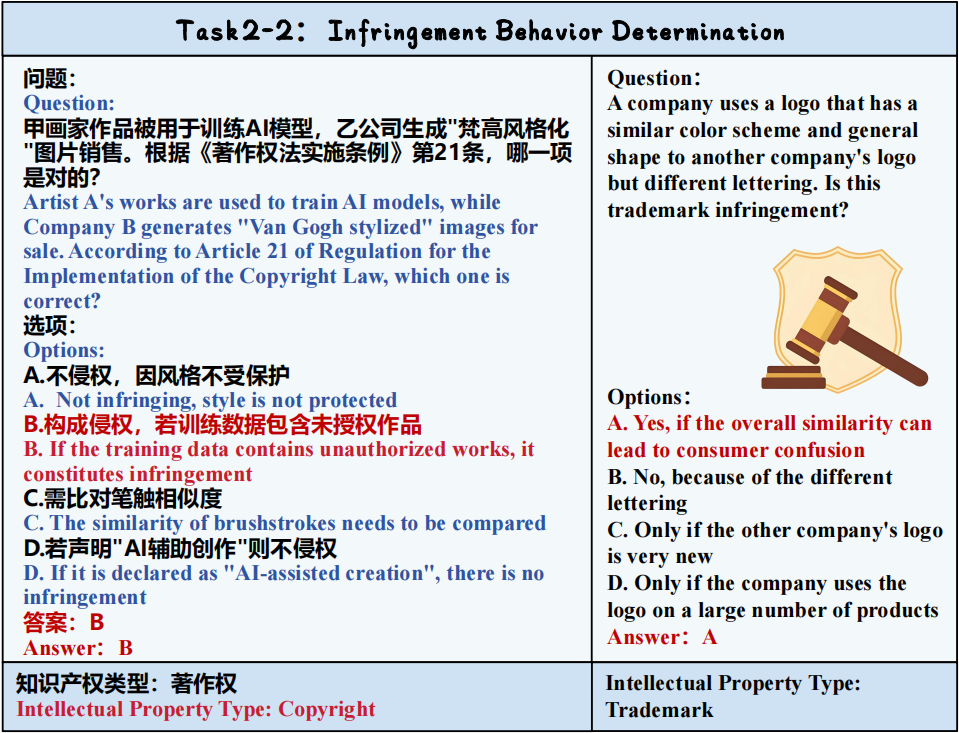

Task example of Task 2-2.

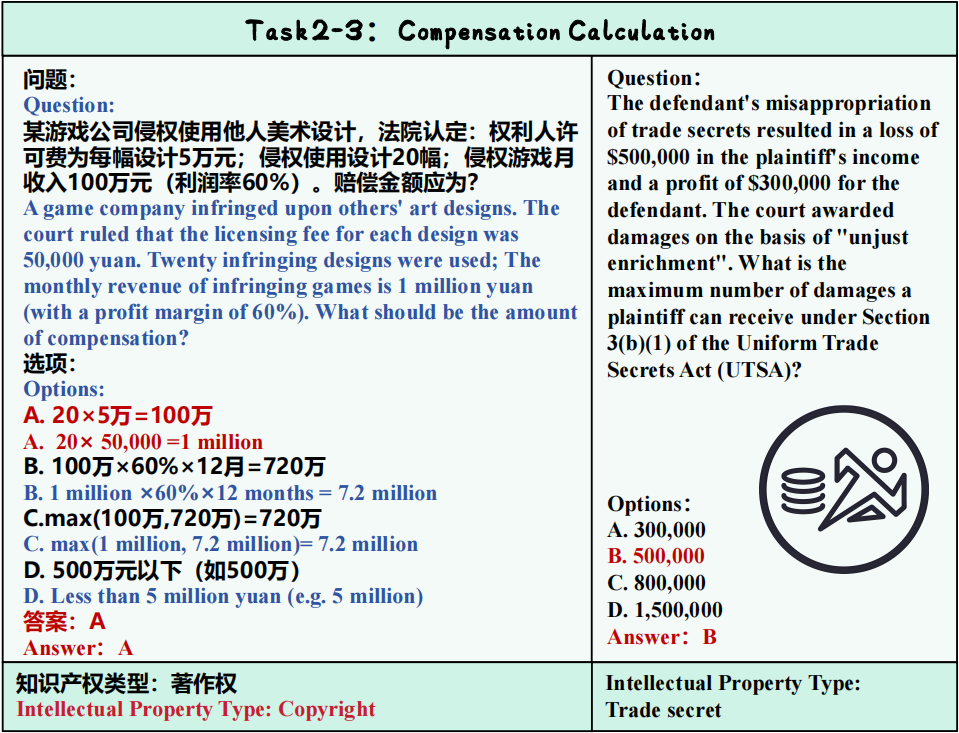

Task example of Task 2-3.

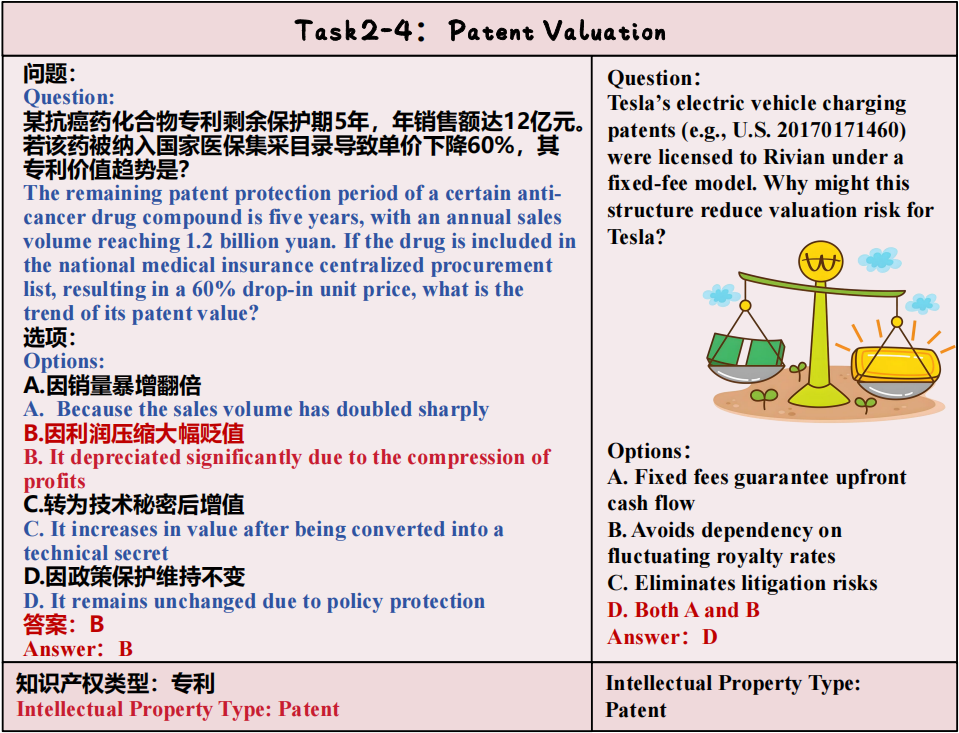

Task example of Task 2-4.

Task example of Task 2-5.

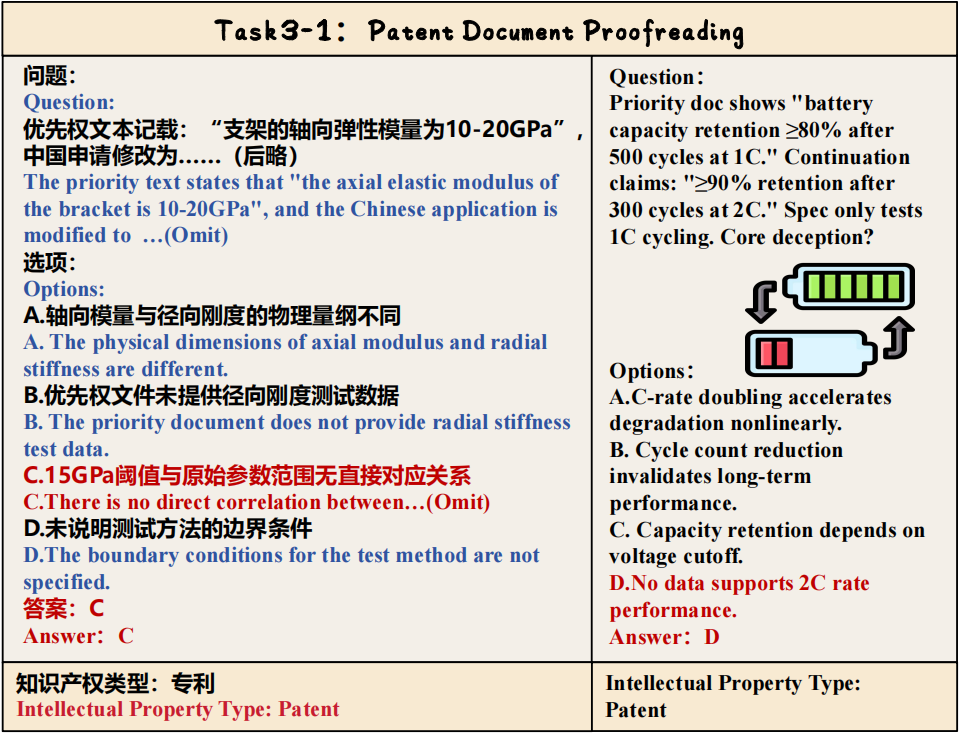

Task example of Task 3-1.

Task example of Task 3-2.

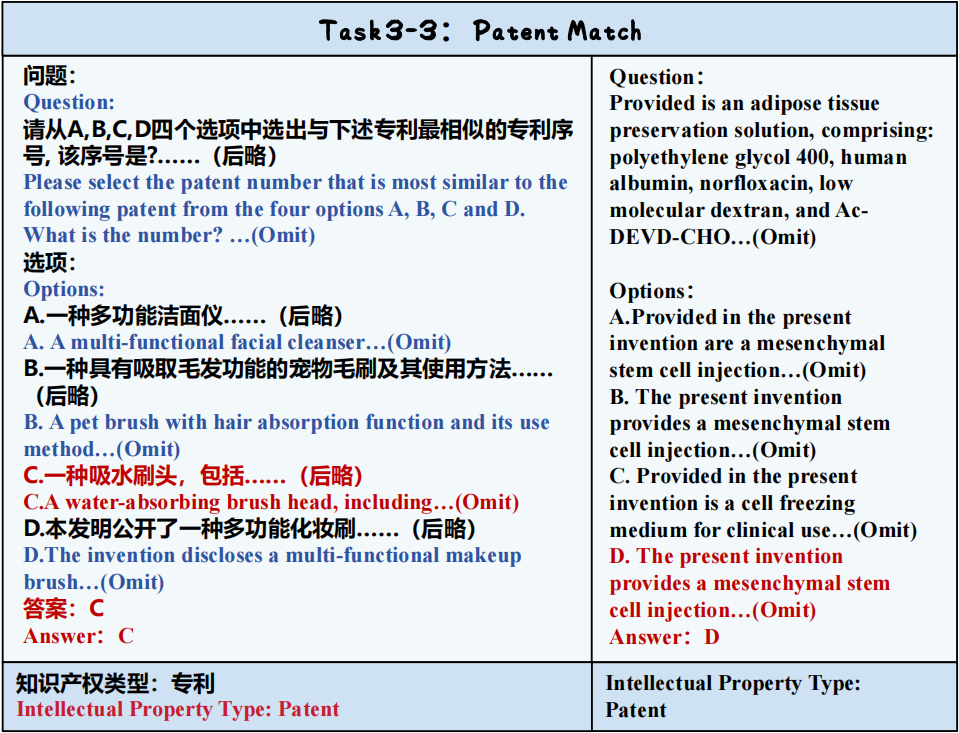

Task example of Task 3-3.

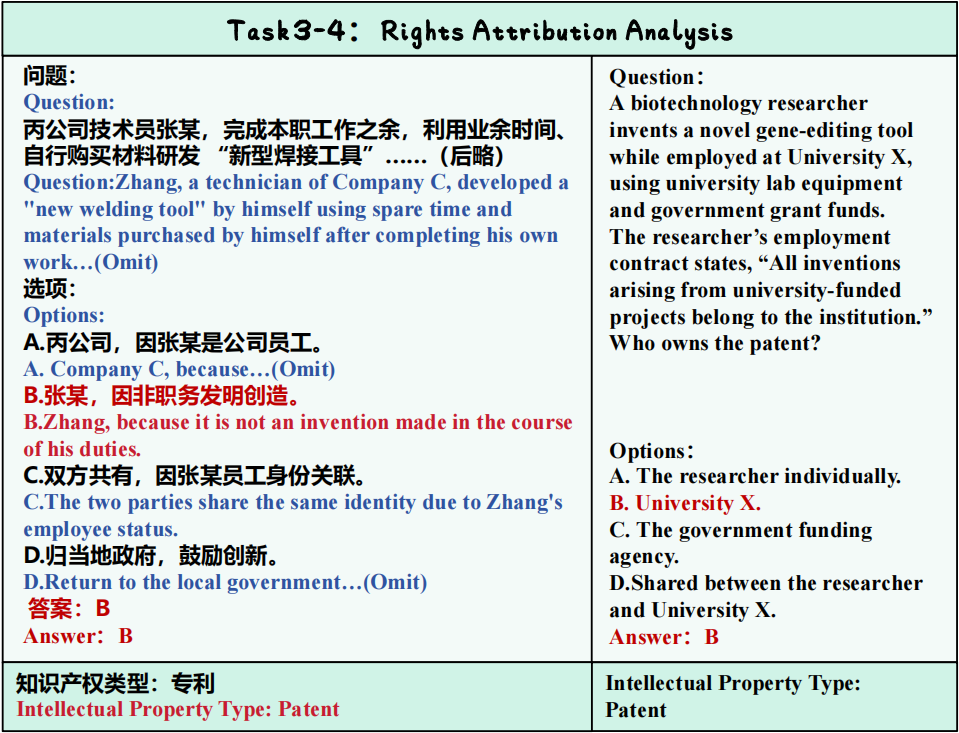

Task example of Task 3-4.

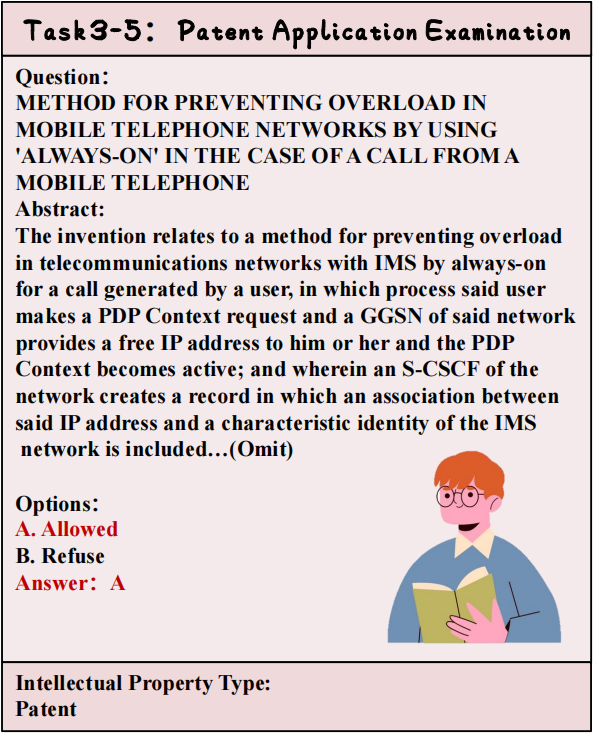

Task example of Task 3-5.

Task example of Task 4-1.

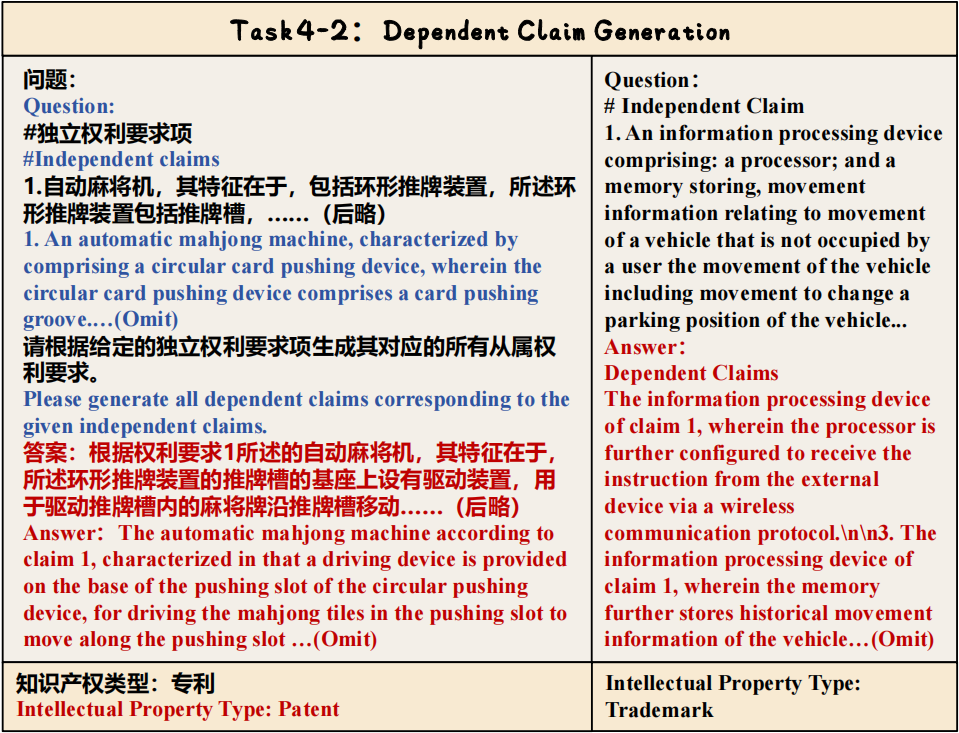

Task example of Task 4-2.

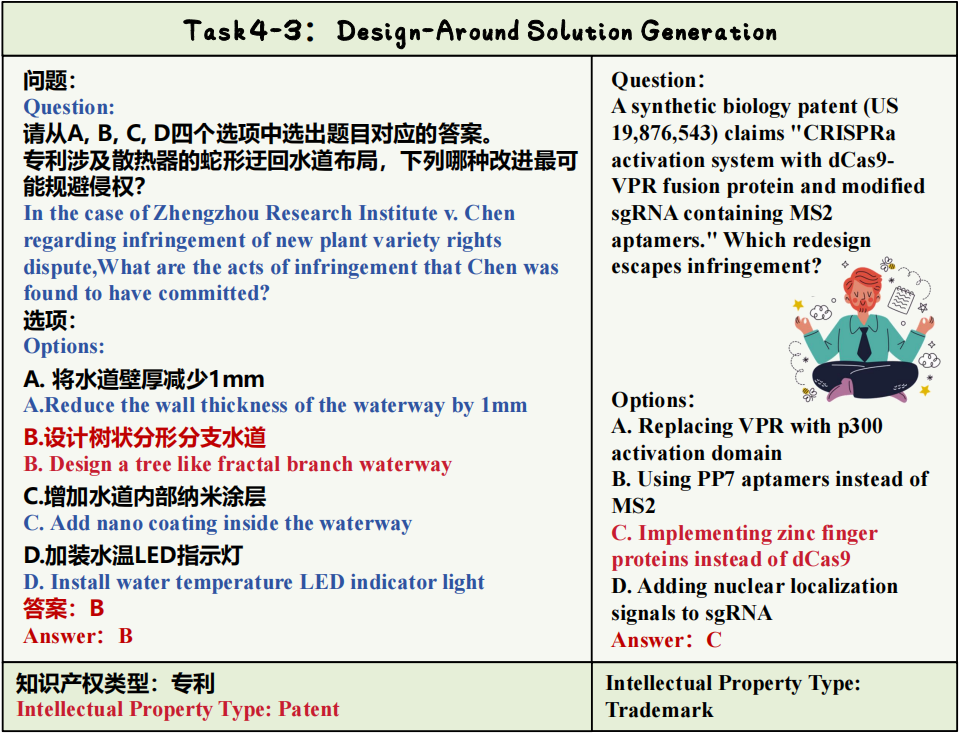

Task example of Task 4-3.

We evaluated the capabilities of 16 advanced large language models, including 13 general-purpose models, 2 legal-domain models, and 1 intellectual property (IP)-domain model. The general-purpose models include chat models such as GPT-4o, DeepSeek-V3, Qwen, Llama, Gemma, and Mistral, as well as reasoning-oriented models represented by DeepSeek-R1 and QwQ. Each model was tested under five different settings: zero-shot, one-shot, two-shot, three-shot, and Chain-of-Thought. Detailed experimental parameters can be found in the paper.

Click on different buttons to view the results under various settings.

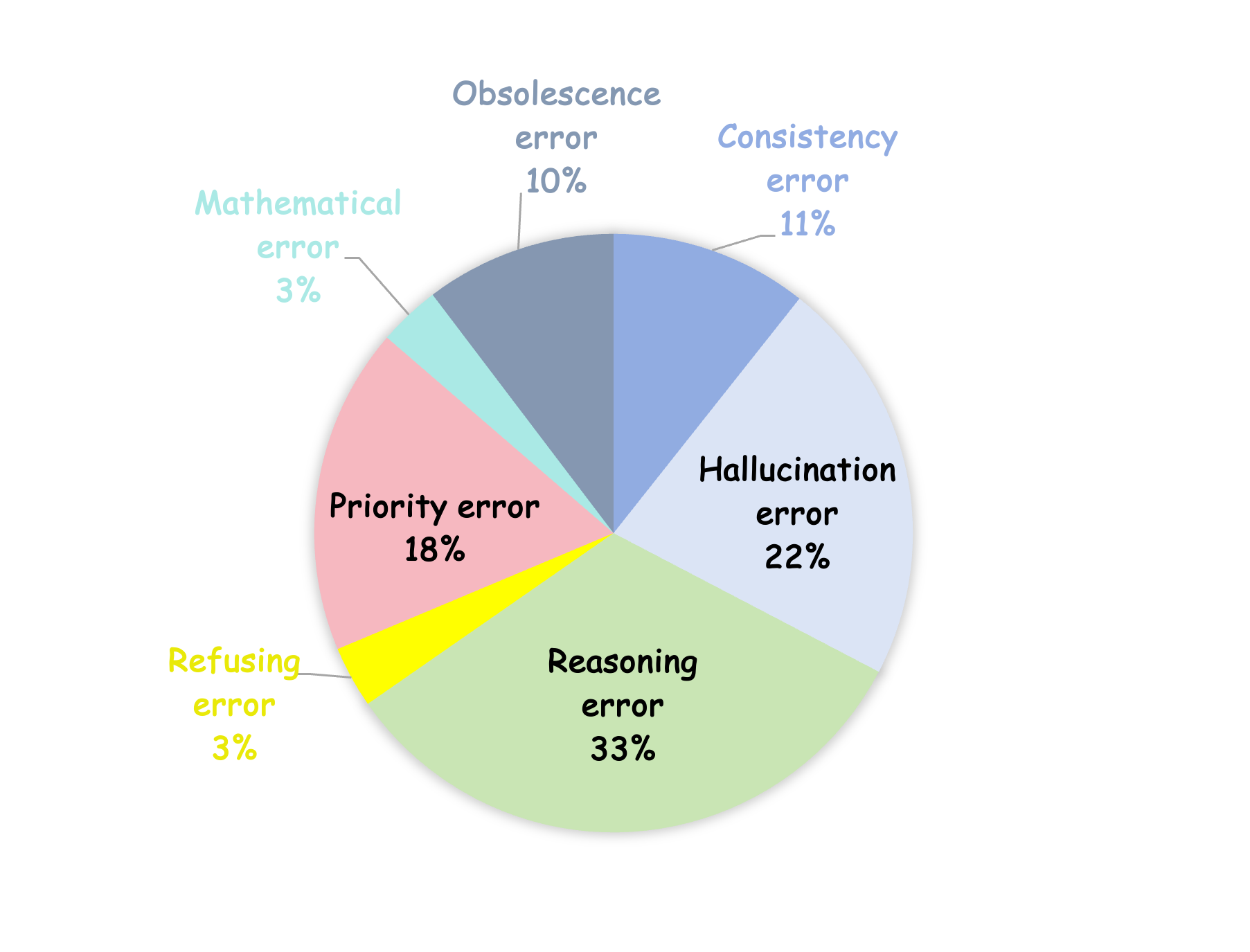

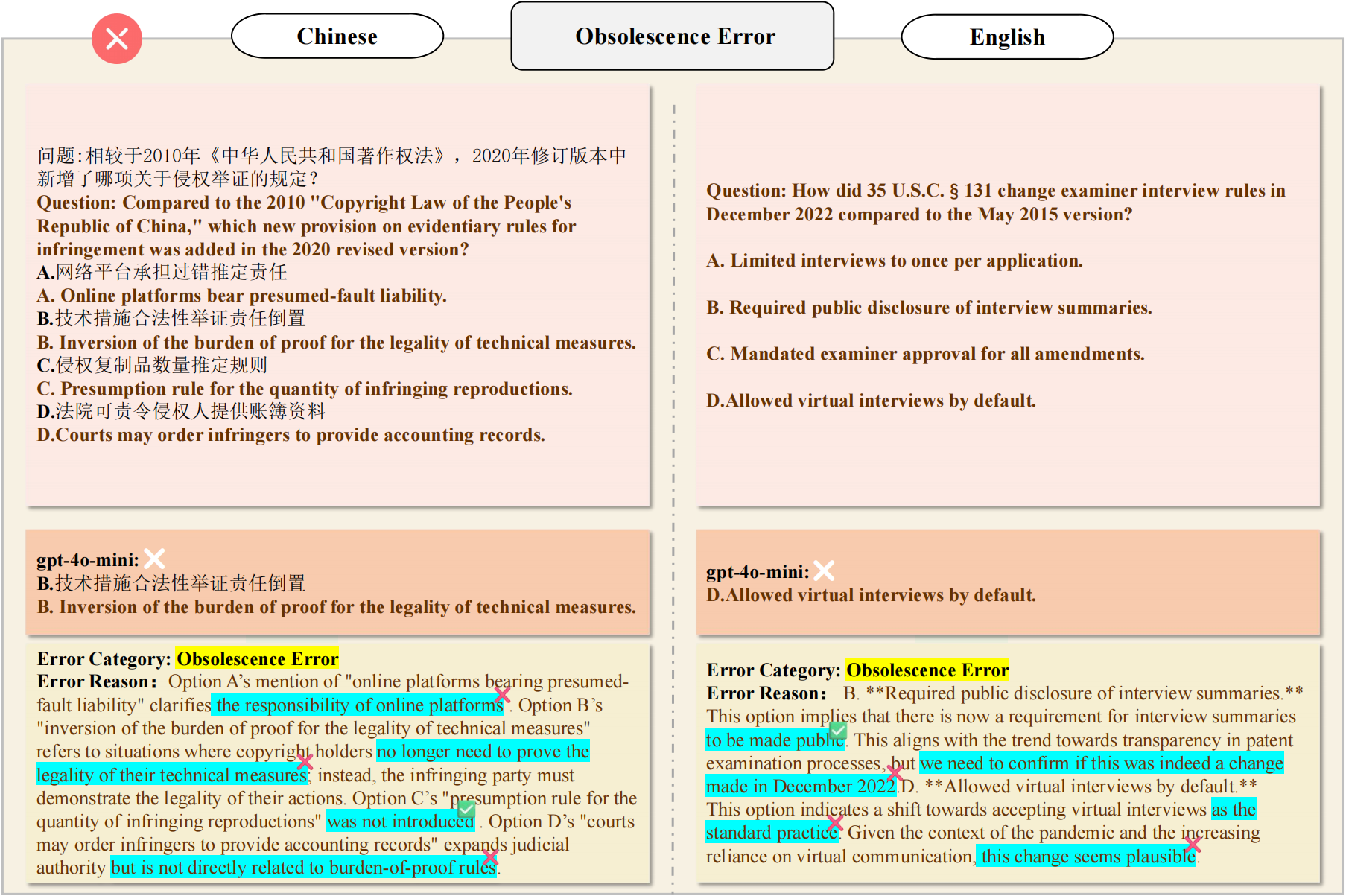

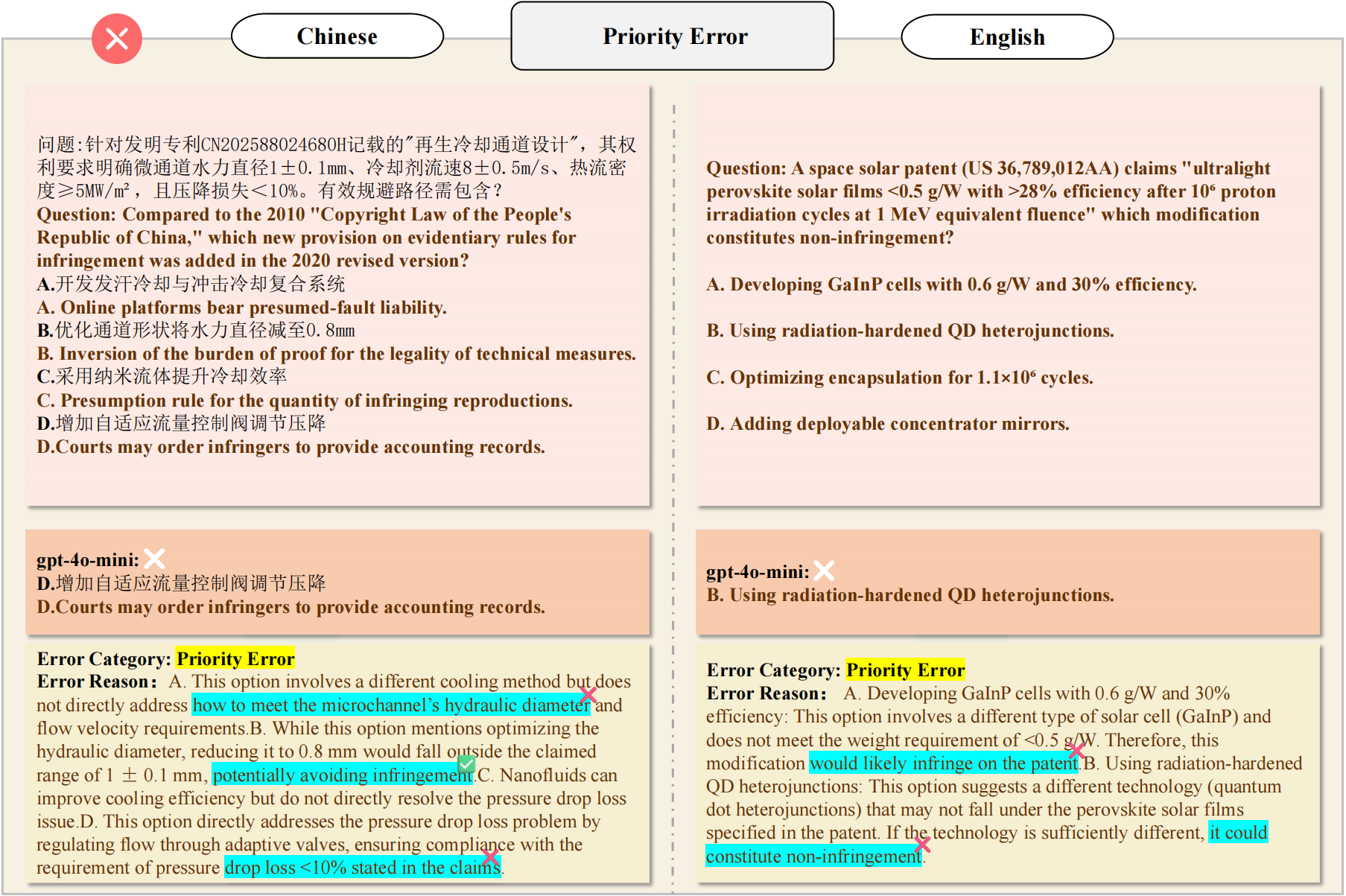

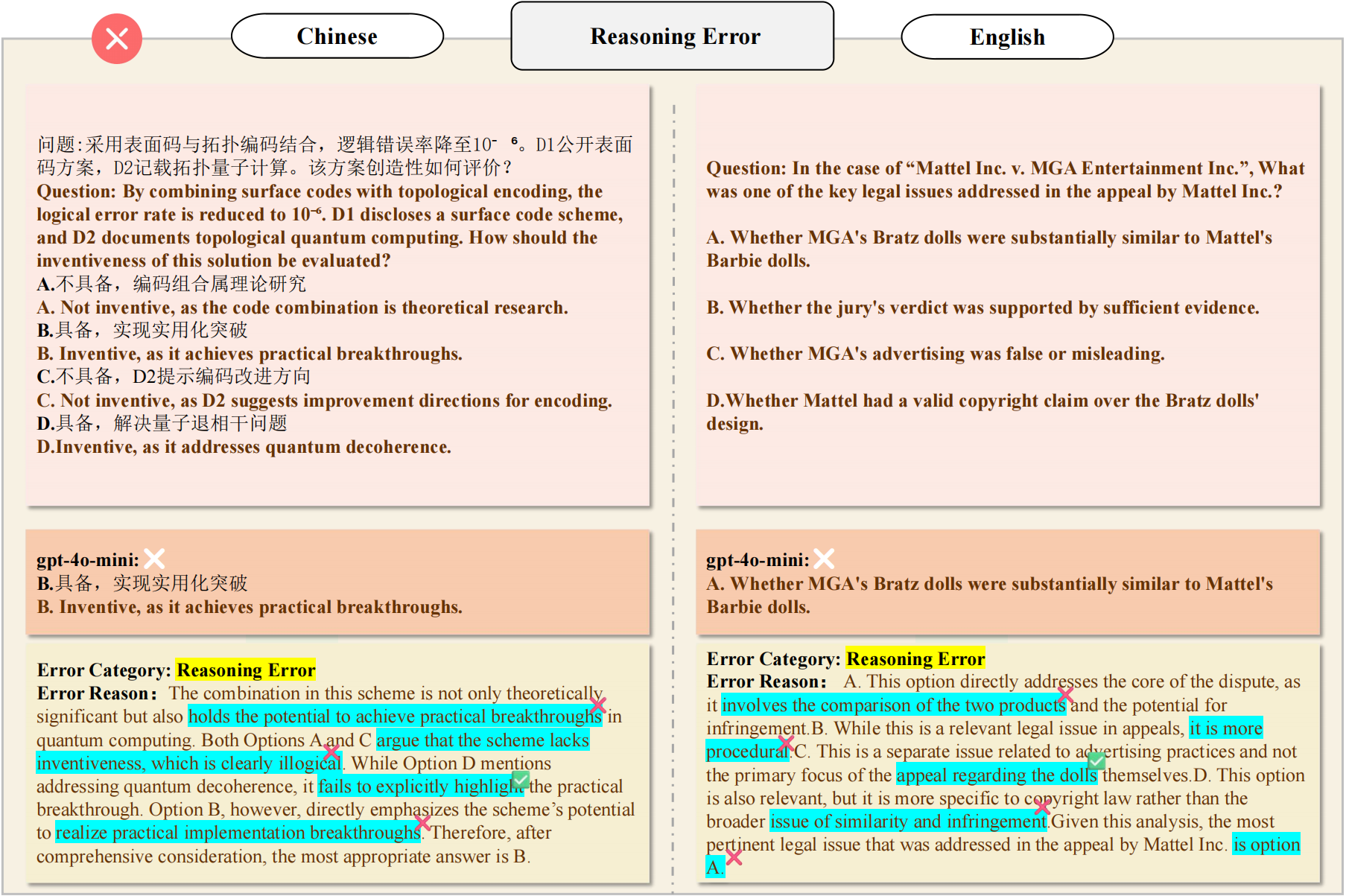

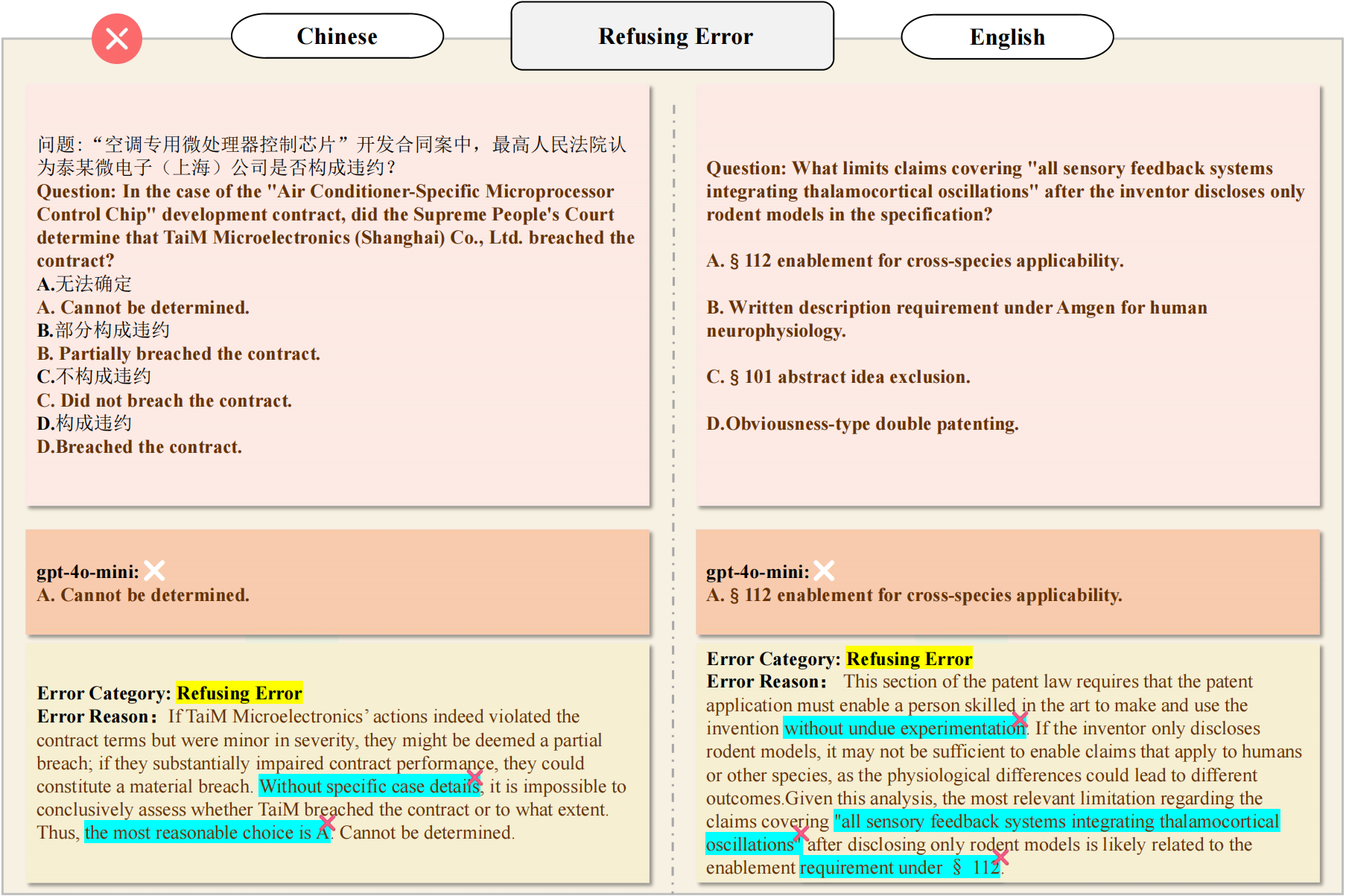

We randomly selected 300 incorrect responses across all tasks from GPT-4o-mini under the CoT setting for error analysis. We classify the error into 7 types: Consistency error, Hallucination error, Reasoning error, Refusing error, Priority error, Mathematical error and Obsolescence error, where Reasoning Error is the most common error type, accounting for 33%.

Error distribution over 300 annotated GPT-4o-mini errors.

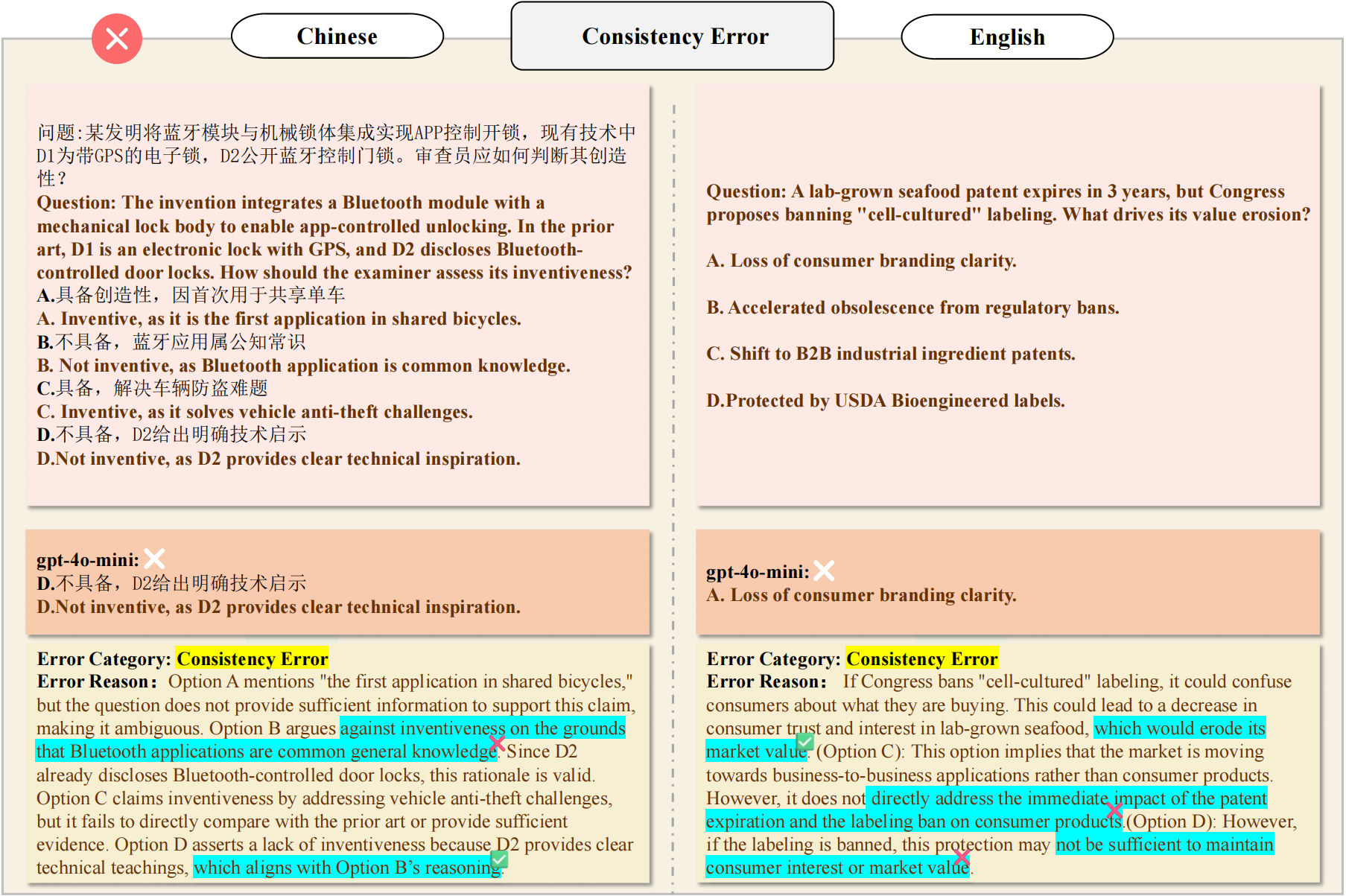

Consistency error case study.

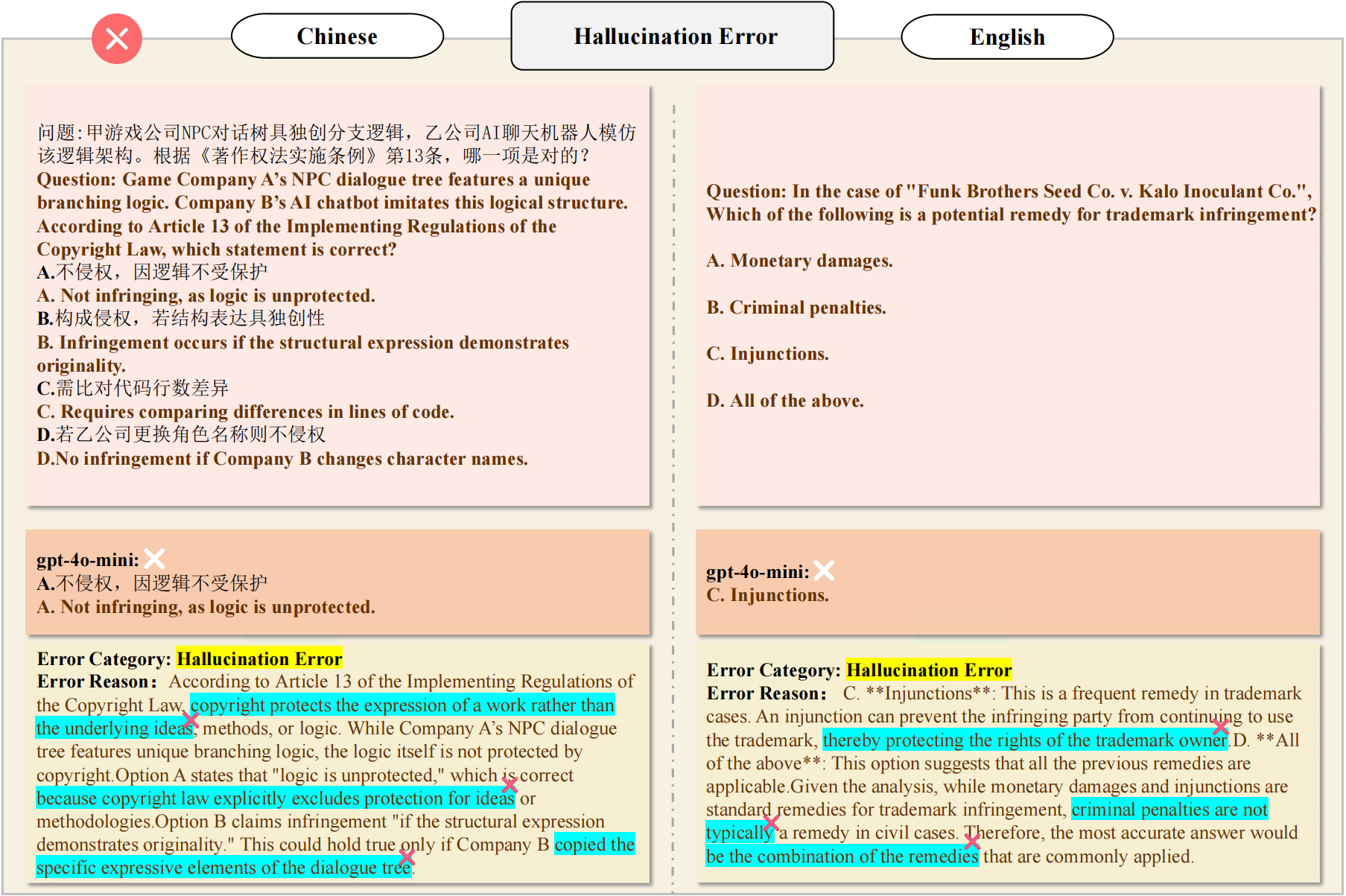

Hallucination error case study.

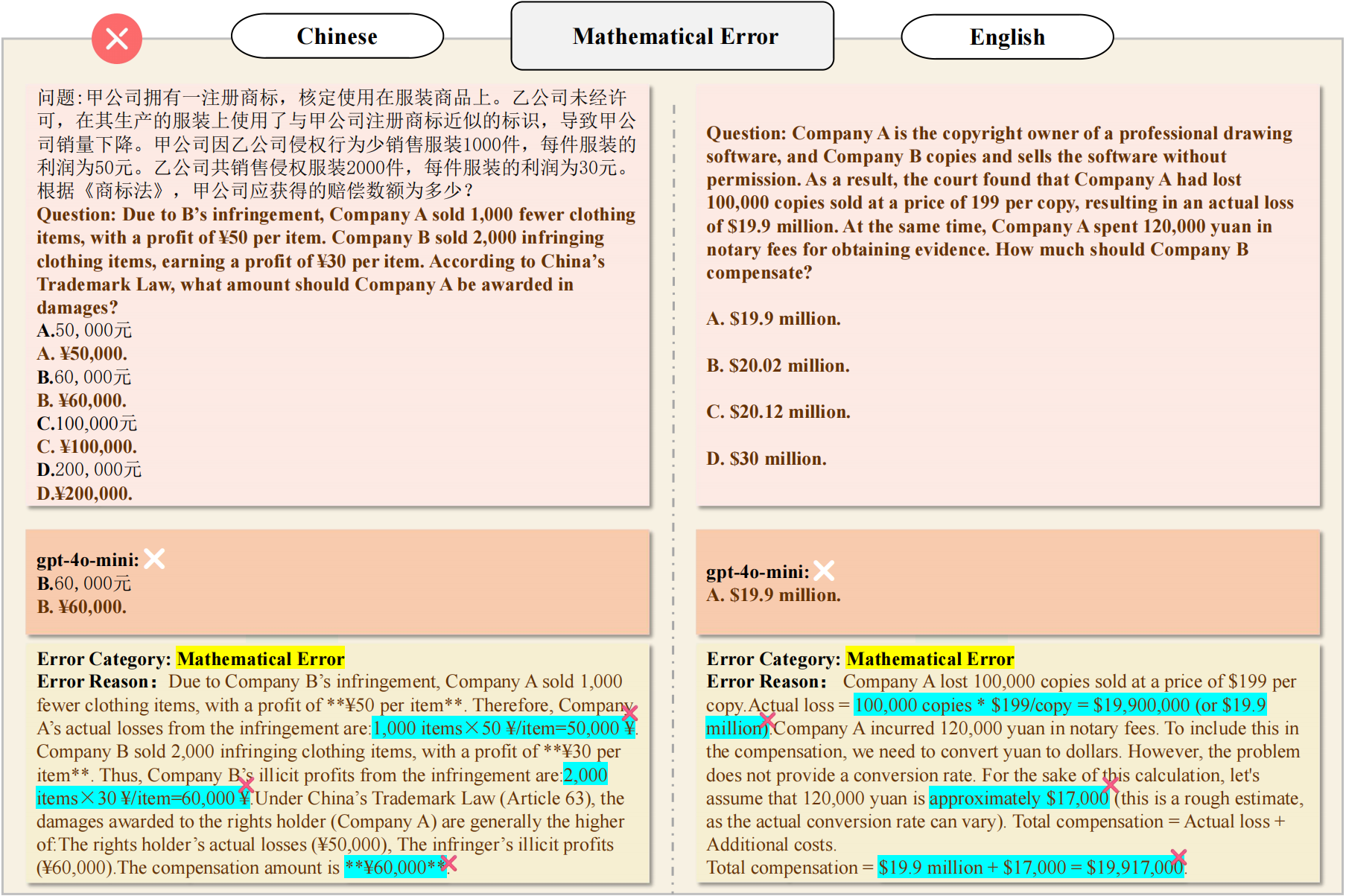

Mathematical error case study.

Obsolescence error case study.

Priority error case study.

Reasoning error case study.

Refusing error case study.

comming...